NAudio Wave Stream Architecture

This post explains the basic architecture for audio streaming with the NAudio .NET open source audio library. The most important concept to understand is a Wave Stream. This is simply a stream of audio data, in any format, that can be read from and repositioned. In NAudio, all Wave Streams inherit from WaveStream, which in turn inherits from System.IO.Stream. NAudio ships with a number of highly useful WaveStream-derived classes, but there is nothing stopping you from creating your own.

Many types of Wave Stream accept input from one or more other Wave Streams and process that data in some way. For example, the WaveChannel32 stream turns mono 16 bit WAV data into stereo 32 bit data, and allows you to adjust panning and volume at the same time. The WaveMixer32 stream can take any number of 32 bit input streams and mix them together to produce a single output. The WaveFormatConversionStream will attempt to find an ACM codec that performs the format conversion you wish. For example, you might have some ADPCM compressed audio that you wish to decompress to PCM. Another common use of WaveStreams is to apply audio effects such as EQ, reverb or compression to the signal.
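As a rough sketch of how such a chain is wired up in C#, the snippet below decompresses a file to PCM and then wraps it in a WaveChannel32. The file name is a placeholder, and the sketch assumes a Windows machine with an ACM codec installed for the source format and an NAudio version that provides the static CreatePcmStream helper.

```csharp
using System;
using NAudio.Wave;

class ChainExample
{
    static void Main()
    {
        // "speech-adpcm.wav" is a placeholder for a compressed (e.g. ADPCM) WAV file.
        using (var reader = new WaveFileReader("speech-adpcm.wav"))
        // Ask ACM for a codec that can decode the source format to PCM.
        using (var pcm = WaveFormatConversionStream.CreatePcmStream(reader))
        // Wrap the PCM stream so it comes out as stereo 32 bit floating point.
        using (var channel = new WaveChannel32(pcm))
        {
            channel.Volume = 0.5f; // 1.0 is full volume
            channel.Pan = -1.0f;   // -1.0 is hard left, 1.0 is hard right

            // Each stage reports its own output format.
            Console.WriteLine(reader.WaveFormat);
            Console.WriteLine(pcm.WaveFormat);
            Console.WriteLine(channel.WaveFormat);
        }
    }
}
```

Nothing is read from the file until something downstream calls Read on the last stream in the chain; each stage pulls from the one it wraps.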

Obviously, you need a starting point for your Wave data to come from. The most common options are the WaveFileReader, which can read standard WAV files, and the WaveInStream, which captures data from a soundcard. Another source you might want to create yourself is a software synthesizer, emitting sound according to the notes the user is playing on a MIDI keyboard.

Once you have processed your audio data, you will want to render it somewhere. The two main options are an audio device or a file. NAudio provides a variety of options here. The most common are WaveOut, which allows playback of a WaveStream using the WinMM APIs, and WaveFileWriter, which can write standard WAV files.
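A minimal playback sketch might look like the following. It assumes a console program and a later NAudio release, so it uses WaveOutEvent, the event-driven WinMM player, because that class does not need a window message loop. In a Windows Forms or WPF application, WaveOut is initialised and started with the same Init and Play calls. The file name is a placeholder.

```csharp
using System.Threading;
using NAudio.Wave;

class PlaybackExample
{
    static void Main()
    {
        // "input.wav" is a placeholder for any standard PCM WAV file.
        using (var reader = new WaveFileReader("input.wav"))
        using (var output = new WaveOutEvent())
        {
            output.Init(reader); // connect the source stream to the device
            output.Play();       // playback runs on a background thread

            // Keep the program alive until the end of the file is reached.
            while (output.PlaybackState == PlaybackState.Playing)
            {
                Thread.Sleep(100);
            }
        }
    }
}
```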

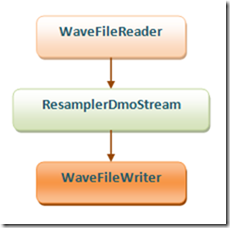

The following example shows a very simple graph which reads from one WAV file and passes the data through the DMO Resampler Wave Stream before writing it out to another WAV file. This would be all you needed to create your own WAV file resampling utility:

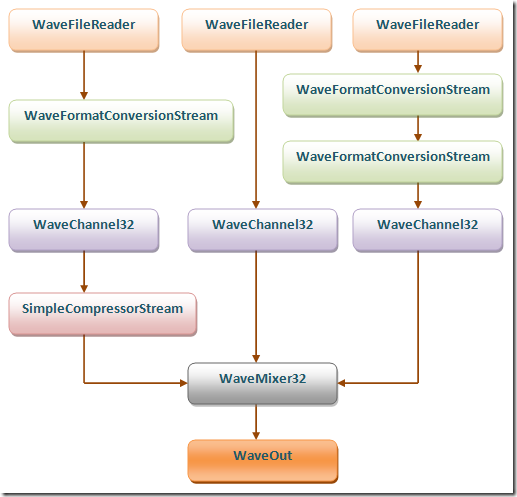
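In code, a graph like this could be sketched roughly as follows. This assumes an NAudio version that includes ResamplerDmoStream and the static WaveFileWriter.CreateWaveFile helper, a Windows version that ships the DMO resampler, and a PCM input file. The file names and the 16 kHz target rate are arbitrary placeholders.

```csharp
using NAudio.Wave;

class ResampleExample
{
    static void Main()
    {
        // Source: read the original WAV file.
        using (var reader = new WaveFileReader("input.wav"))
        {
            // Keep the channel count and change only the sample rate.
            var outFormat = new WaveFormat(16000, reader.WaveFormat.Channels);

            // Processing stage: the resampler pulls from the reader on demand.
            using (var resampler = new ResamplerDmoStream(reader, outFormat))
            {
                // Sink: read the resampler to the end and write a new WAV file.
                WaveFileWriter.CreateWaveFile("output.wav", resampler);
            }
        }
    }
}
```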

Of course, you can do much more complicated things with Wave Streams. The next example shows three WaveFileReader objects as the inputs. Two of them go through some stages of format conversion before they are all converted into IEEE 32 bit audio. Then one of the streams goes through an audio compressor effect to adjust its dynamic range, before all three streams are mixed together by WaveMixer32. The output could be processed further, but in this example it is simply played out of the soundcard using WaveOut.
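A simplified version of that graph can be sketched in C# as shown here. It leaves out the format conversion stages and the compressor effect, so it assumes all three placeholder files are already 16 bit PCM at the same sample rate. The mixer class is called WaveMixerStream32 in the NAudio versions this sketch was written against, and WaveOutEvent is used in place of WaveOut so that the code can run in a console program.

```csharp
using System.Threading;
using NAudio.Wave;

class MixExample
{
    static void Main()
    {
        var mixer = new WaveMixerStream32();
        mixer.AutoStop = true; // stop once the longest input has been played

        // Placeholder file names; all inputs must share one sample rate.
        foreach (var path in new[] { "drums.wav", "bass.wav", "vocals.wav" })
        {
            var reader = new WaveFileReader(path);
            // Convert each input to stereo 32 bit IEEE float, which the mixer requires.
            var channel = new WaveChannel32(reader);
            mixer.AddInputStream(channel);
        }

        using (var output = new WaveOutEvent())
        {
            output.Init(mixer);
            output.Play();
            while (output.PlaybackState == PlaybackState.Playing)
            {
                Thread.Sleep(100);
            }
        }

        mixer.Dispose();
    }
}
```

An effect such as a compressor would sit between a WaveChannel32 and the mixer as one more stream that wraps its input.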

Hopefully this gives a good flavour of the type of things possible with WaveStreams in NAudio. In a future post, I will explain how to create your own custom WaveStream, and I will discuss in more depth the design decisions behind the WaveStream class.

A place for me to share what I'm learning: Azure, .NET, Docker, audio, microservices, and more...

Comments

iamnotsmart:
hi Mark,

Im really sorry if im making comments everywhere. I just dont know how to contact you. Anyway, ive noticed that in the WaveFileWriter you have to call close so that the "file" or the stream it contains will have complete WAV file/stream. now, if i use file i have no prob with Wavout Playback. However if use IO stream such as MemoryStream to get the byte array from WaveFileWriter and use it in WAVEOUT playback without WaveFileWriter.Close it is having "NO RIFF header error". I think because inorder for the stream to complete i have to call WaveFileWriter.Close. Well i DID call that procedure but the problem is im having the "STREAM already closed" errors or something thesame. is there work around with this? what im after is to be able to stream using bytearray over the network.

(and hey i finally understood the READ part of IWAVEProvider)

Thanks again for this really wonderful dll.

iamnotsmart:
hi mark,

ive got things working already, what i did was to imitate what you did on WaveFileWriter.Close and put it inside a new procedure like writerGenerateFullSoundStream...now i am able to stream byte array to the network...and play them...thank you so much for this wonderfull DLL...

Mark H:
hi iamnotsmart, glad you got things working in the end. hopefully I will have the time to write some demos of this kind of thing in the future, as I get a lot of similar questions

Anonymous:
Hi Mark, I was wondering what tool did you use to draw your schemas like in this post?

Mark H:
Microsoft Word! I would love to find something better though

Anonymous:
Haha, didn't think about it ! Thanks.

Anonymous:
Hi Mark and iamnotsmart,

Can you share with me how did u 2 do?? I'm new in C# and also in NAudio.. Now I ve to play back what the user write in text box. So it will be string. I changed string to memory stream..then use NAudio...But same with iamnotsmart...I got error shown that the header is not 'RIFF'.... I urgently need help..pls pls pls...

Mark H:
@anonymous - NAudio cannot do text to audio. You need to use the Microsoft speech engine to do that.

candritzky:
Hi Mark,

I cannot find the WaveInStream class mentioned in this article in the most recent version of the library (1.4RC). What is the recommended replacement if I want to convert the data from a WaveIn device to a WaveStream?

Thanks,

Chris

Mark H:
@candritzky - the recommended way is to put the recorded bytes into a BufferedWaveProvider. WaveInStream was never a proper WaveStream, so I renamed it to WaveIn.

Anonymous:
I am unable to convert .wav file of IMA ADPCM format to .wav file of PCM format. Please help.