The Case for Durable Workflows

Regular readers of my blog will know I'm a big fan of Azure Functions, and one very exciting new addition to the platform is Durable Functions. Durable Functions is an extension to Azure Functions allowing you to create workflows (or "orchestrations") consisting of multiple functions.

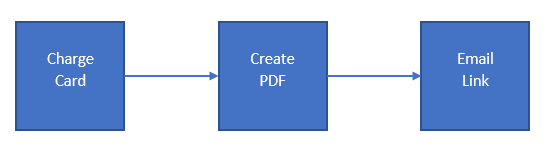

An example workflow

Imagine we are selling e-books online. When an order comes in we might have a simple three-step workflow. First, let's charge the credit card. Then, we'll create a personalized PDF of the e-book watermarked with the purchaser's email address to discourage them from sharing it online. And finally we'll email them a download link.

Why do we need Durable Functions?

This is about as simple a workflow as you can imagine, just three calls one after the other. And you might well be thinking, I can already implement a workflow like that with Azure Functions (or any other FaaS platform). Why do I need Durable Functions?

Well, let's consider how we might implement our example workflow without using Durable Functions.

Workflow within one function

The simplest implementation is to put the entire workflow inside a single Azure function. First call the payment provider, then create the PDF, and then send the email.

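```csharp
// The whole workflow in one function: each step runs only if the previous one succeeded
void MyWorkflowFunction(OrderDetails order)
{
    // 1. take payment
    ChargeCreditCard(order.PaymentDetails, order.Amount);
    // 2. create the watermarked PDF and get back where it was stored
    var pdfLocation = GeneratePdf(order.ProductId, order.EmailAddress);
    // 3. email the customer a download link
    SendEmail(order.EmailAddress, pdfLocation);
}
```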

Now there are a number of reasons why this is a bad idea, not least of which is that it breaks the "Single Responsibility" principle. More significantly, whenever we have a workflow, we need to consider what happens should any of the steps fail. Do we retry, do we retry with backoff, do we need to undo a previous action, do we abort the workflow or can we carry on regardless?

This one function is likely to grow in complexity as we start to add in error handling and the workflow picks up additional steps.
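To make that growth concrete, here is a minimal sketch of the kind of hand-rolled retry-with-back-off helper a single-function workflow ends up needing. `RetryWithBackoff` and the simulated `ChargeCreditCard` are hypothetical names for illustration, and real code would also have to decide which exceptions are actually worth retrying.

```csharp
using System;
using System.Threading;

// Hypothetical helper: retries an action up to maxAttempts times in total,
// doubling the wait after each failure (exponential back-off).
void RetryWithBackoff(Action action, int maxAttempts, TimeSpan initialDelay)
{
    var delay = initialDelay;
    for (var attempt = 1; ; attempt++)
    {
        try
        {
            action();
            return; // success: stop retrying
        }
        catch (Exception) when (attempt < maxAttempts)
        {
            // attempts remain: wait, then double the delay for next time
            Thread.Sleep(delay);
            delay = TimeSpan.FromTicks(delay.Ticks * 2);
        }
        // on the final attempt the filter is false, so the exception propagates
    }
}

// Simulated payment call (hypothetical) that times out twice, then succeeds.
var chargeAttempts = 0;
void ChargeCreditCard()
{
    chargeAttempts++;
    if (chargeAttempts < 3)
        throw new TimeoutException("payment provider did not respond");
}

// Only one of the three steps is wrapped so far; the others need the same
// treatment, plus decisions about refunds if a later step fails.
RetryWithBackoff(ChargeCreditCard, maxAttempts: 4, initialDelay: TimeSpan.FromMilliseconds(10));
Console.WriteLine($"Card charged after {chargeAttempts} attempts"); // prints "Card charged after 3 attempts"
```

And this is just the retry policy for one step, before any logging, compensation or alerting has been added.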

There's also the issue of scaling - by lumping all steps together they cannot be scaled independently. And how long does this function take to run? The longer it runs, the greater the chance that the VM it's running on could be recycled mid-operation, leaving us needing to somehow make the workflow steps "idempotent" so we can carry on from where we left off on a retry.
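One way to make re-running the workflow safe is to record each completed step and skip it on the next run. The sketch below assumes an in-memory `HashSet` standing in for a durable store such as a database table; `RunOnce` and `RunWorkflow` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;

// Stand-in for a durable store such as a database table (in-memory here, for illustration).
var completedSteps = new HashSet<(string OrderId, string Step)>();
var sideEffects = new List<string>();

// Hypothetical helper: runs a step only if it has not already been recorded as
// completed for this order, then records it.
void RunOnce(string orderId, string step, Action action)
{
    if (completedSteps.Contains((orderId, step))) return; // done on an earlier run
    action();
    completedSteps.Add((orderId, step));
}

void RunWorkflow(string orderId)
{
    RunOnce(orderId, "ChargeCreditCard", () => sideEffects.Add($"charged {orderId}"));
    RunOnce(orderId, "GeneratePdf", () => sideEffects.Add($"generated pdf for {orderId}"));
    RunOnce(orderId, "SendEmail", () => sideEffects.Add($"emailed {orderId}"));
}

RunWorkflow("order-42");
RunWorkflow("order-42"); // simulated retry after the host was recycled: nothing repeats
Console.WriteLine(string.Join("; ", sideEffects));
```

There is still a gap between performing a step and recording it: if the host dies in that window, the step runs again, so the individual operations (the card charge in particular) ideally need to be idempotent themselves.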

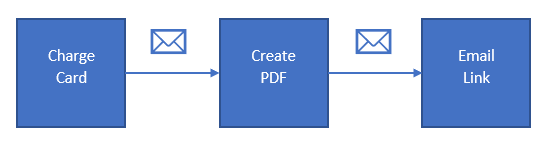

Workflow via messages

A more common way to implement a workflow like this would be to use intermediate queues between the functions. So the first function charges the credit card, and then puts a message in a queue to trigger the PDF generator function. When that function has finished it puts a message in a queue that triggers the emailing function.

This approach has a number of benefits. Each function just performs a single step in the workflow, and the use of queues means that the platform will give us a certain number of retries for free. If the PDF generation turns out to be slow, the Azure Functions runtime can spin up multiple instances that help us clear through the backlog. It also solves the problem of the VM going down mid-operation. The queue messages allow us to carry on from where we left off in the workflow.

But there are still some issues with this approach. One is that the definition of our workflow is now distributed across the codebase. If I want to understand what steps happen in what order, I must examine the code for each function in turn to see what gets called next. That can get tedious pretty quickly if you have a long and complex workflow. It also still breaks the "Single Responsibility" principle, because each function knows both how to perform its step in the workflow and what the next step should be. Introducing a new step into the workflow requires finding the function before the insertion point and changing its outgoing message.
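The chaining, and the way each function hard-codes its successor, can be sketched with in-memory queues standing in for storage queues and a simple loop standing in for the Functions runtime's queue triggers. The queue and function names here are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// In-memory stand-ins for storage queues (hypothetical names); in Azure Functions
// each queue would trigger its own function.
var queues = new Dictionary<string, Queue<string>>
{
    ["orders"] = new(),
    ["generate-pdf"] = new(),
    ["send-email"] = new(),
};
var log = new List<string>();

// Each function performs its own step AND hard-codes which queue comes next.
void ChargeCreditCardFunction(string orderId)
{
    log.Add($"charged {orderId}");
    queues["generate-pdf"].Enqueue(orderId);
}

void GeneratePdfFunction(string orderId)
{
    log.Add($"pdf for {orderId}");
    queues["send-email"].Enqueue(orderId);
}

void SendEmailFunction(string orderId) => log.Add($"emailed {orderId}");

// Which function each queue triggers.
var triggers = new Dictionary<string, Action<string>>
{
    ["orders"] = ChargeCreditCardFunction,
    ["generate-pdf"] = GeneratePdfFunction,
    ["send-email"] = SendEmailFunction,
};

// A new order arrives.
queues["orders"].Enqueue("order-42");

// Crude dispatch loop standing in for the runtime's queue triggers:
// keep delivering messages until every queue is empty.
bool delivered;
do
{
    delivered = false;
    foreach (var (name, queue) in queues)
    {
        while (queue.TryDequeue(out var message))
        {
            triggers[name](message);
            delivered = true;
        }
    }
} while (delivered);

Console.WriteLine(string.Join(" -> ", log));
```

Inserting a new step between charging and PDF generation would mean editing `ChargeCreditCardFunction` to target a different queue, which is exactly the coupling described above.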

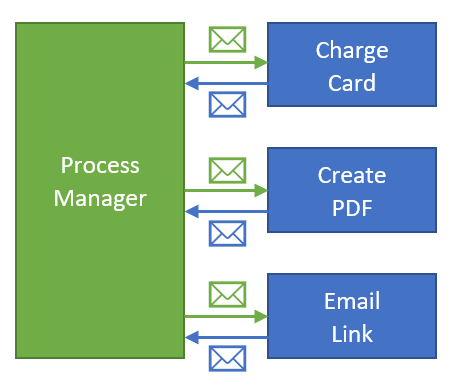

Workflow via a process manager function

Can we do better than this? Well, let's imagine that we introduce a fourth function - a "process manager" or "orchestrator". This function has the job of calling each of the three "activity" functions in turn. It does so by posting queue messages that trigger them, but when they are done, instead of knowing what the next function is, they simply report back to the orchestrator that they are finished by means of another queue message.

What this means is that the orchestrator function has to keep track of where we are in the workflow. Now it might just about be possible to achieve this without needing a database, if the messages in and out of each "activity" function keep hold of all the state. But typically in practice we'd find ourselves needing to store state in a database and look up where we got to.

Here's a very simplistic implementation of a process manager function that doesn't perform any error handling and assumes all necessary state is held in the messages.

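```csharp
// Simplistic process manager: no error handling, all state carried in the messages
void MyProcessManagerFunction(WorkflowMessage message, Queue queue)
{
    switch (message.Type)
    {
        case "NewOrderReceived":
            // start the workflow: ask the payment activity to charge the card
            queue.Send("CreditCardQueue", new ChargeCreditCardMessage(message.OrderDetails));
            break;
        case "CreditCardCharged":
            // payment done: trigger PDF generation
            queue.Send("GeneratePdf", new GeneratePdfMessage(message.OrderDetails));
            break;
        case "PdfGenerated":
            // PDF ready: email the download link
            queue.Send("SendEmail", new SendEmailMessage(message.OrderDetails, message.PdfLocation));
            break;
        case "EmailSent":
            // workflow is complete
            break;
    }
}
```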

This solution has several benefits over the previous two approaches we discussed, but we have now got four queues and four message types just to implement a simple three-function sequential workflow. To implement error handling, our response messages would need to be upgraded to include a success flag, and if we were implementing retries, we'd want a way to count how many retries we'd attempted.
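To see how quickly that grows, here is a sketch of the process manager's reply handling once replies carry a success flag and an attempt count. Tuples stand in for message classes, `Send` just records what would be queued, and the `AlertAdmin` queue and the limit of four attempts are illustrative assumptions. Back-off delays are not handled at all.

```csharp
using System;
using System.Collections.Generic;

const int MaxAttempts = 4; // assumed retry limit
var sent = new List<(string Queue, string OrderId, int Attempt)>();

// Stand-in for posting a queue message: just records it.
void Send(string queue, string orderId, int attempt) => sent.Add((queue, orderId, attempt));

// Process manager reacting to a reply from an activity (step name, success flag, attempt count).
void OnReply(string step, string orderId, bool success, int attempt)
{
    if (!success)
    {
        if (attempt < MaxAttempts)
            Send(step, orderId, attempt + 1); // retry the same step
        else
            Send("AlertAdmin", orderId, 0);   // give up and escalate
        return;
    }
    switch (step)
    {
        case "ChargeCreditCard": Send("GeneratePdf", orderId, 1); break;
        case "GeneratePdf": Send("SendEmail", orderId, 1); break;
        case "SendEmail": /* workflow complete */ break;
    }
}

// Three independent example replies for one order:
OnReply("ChargeCreditCard", "order-42", success: false, attempt: 1); // retried
OnReply("ChargeCreditCard", "order-42", success: false, attempt: 4); // escalated
OnReply("ChargeCreditCard", "order-42", success: true, attempt: 2);  // moves on
foreach (var m in sent) Console.WriteLine(m);
```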

So even with this approach, things can get complex.

Durable Functions to the rescue

This is where Durable Functions comes in. Durable Functions makes it much easier to implement this kind of process manager or "orchestrator" pattern. We still have a function per "activity" and we have an "orchestrator" function, but now we don't need to manage any of the messaging between the functions ourselves. Durable Functions also manages workflow state for us, so we can keep track of where we are.

Even better, Durable Functions makes really creative use of the C# await keyword, allowing us to write our orchestrator function so that it looks like a regular function calling each of the three activities in turn, even though what is really happening under the hood is a lot more involved. That's thanks to the fact that the Durable Functions extension is built on Microsoft's existing Durable Task Framework.

Here's a slightly simplified example of what a Durable Functions orchestrator function might look like in our situation. An orchestration can receive arbitrary "input data", and call activities with CallActivityAsync. Each activity function can receive input data and return a result.

```csharp
async Task MyDurableOrchestrator(DurableOrchestrationContext ctx)
{
    // the order passed in when the orchestration was started
    var order = ctx.GetInput<OrderDetails>();
    // each activity is invoked by name; the framework handles the messaging
    await ctx.CallActivityAsync("ChargeCreditCard", order);
    var pdfLocation = await ctx.CallActivityAsync<string>("GeneratePdf", order);
    await ctx.CallActivityAsync("SendEmail", new { order.EmailAddress, pdfLocation });
}
```

As you can see the code now is extremely easy to read - the orchestrator is defining the workflow but not concerned with the mechanics of how the activities are actually called.
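How can straight-line code survive a VM restart between steps? The Durable Task Framework relies on event sourcing and replay: the orchestrator is re-run from the start, and activity calls that have already completed are answered from a recorded history instead of being executed again. The toy model below is only meant to illustrate that idea; it is synchronous and in-memory, all names are hypothetical, and it is not how the framework is actually implemented.

```csharp
using System;
using System.Collections.Generic;

// Toy model of replay, NOT the Durable Task Framework's actual implementation.
var history = new List<string>();    // results of completed activity calls, in call order
var executions = new List<string>(); // activities that actually ran

// One "episode": run the orchestrator from the top against the recorded history.
// Returns true once the orchestrator reaches the end.
bool RunEpisode()
{
    var cursor = 0;

    // Replayed calls return the recorded result. The first call with no recorded
    // result runs the activity, records its result and ends the episode
    // (standing in for an await that suspends the orchestrator).
    bool TryCall(string name, Func<string> activity, out string result)
    {
        if (cursor < history.Count)
        {
            result = history[cursor++];
            return true;
        }
        executions.Add(name);
        history.Add(activity());
        result = "";
        return false;
    }

    // The orchestrator itself still reads as three calls in sequence.
    if (!TryCall("ChargeCreditCard", () => "charged", out _)) return false;
    if (!TryCall("GeneratePdf", () => "https://example.invalid/ebook.pdf", out var pdf)) return false;
    if (!TryCall("SendEmail", () => $"emailed link {pdf}", out _)) return false;
    return true;
}

var episodes = 1;
while (!RunEpisode()) episodes++;
// prints "4 episodes, activities run: ChargeCreditCard, GeneratePdf, SendEmail"
Console.WriteLine($"{episodes} episodes, activities run: {string.Join(", ", executions)}");
```

The orchestrator body runs four times, but each activity runs exactly once. This is also why orchestrator code needs to be deterministic: on every replay it must make the same calls in the same order so that the history lines up.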

Error handling

What about error handling? This is where things get even more impressive. With Durable Functions we can easily put retry policies with custom back-offs around an individual activity function simply by using CallActivityWithRetryAsync, and we can catch exceptions wherever they occur in our workflow (whether in the orchestrator itself or in one of the activity functions) just with a regular C# try...catch block.

Here's an updated version of our function that will make up to four attempts to charge the credit card, waiting 30 seconds between each one. It also has an exception handler, which could be used for logging, but equally you can call other activity functions from it - maybe we want to send some kind of alert to the system administrator and attempt to inform the customer that there was a problem processing their order.

```csharp
async Task MyDurableOrchestrator(DurableOrchestrationContext ctx)
{
    try
    {
        var order = ctx.GetInput<OrderDetails>();
        // up to 4 attempts in total, waiting 30 seconds before each retry
        await ctx.CallActivityWithRetryAsync("ChargeCreditCard",
            new RetryOptions(TimeSpan.FromSeconds(30), 4), order);
        var pdfLocation = await ctx.CallActivityAsync<string>("GeneratePdf", order);
        await ctx.CallActivityAsync("SendEmail", new { order.EmailAddress, pdfLocation });
    }
    catch (Exception e)
    {
        // log the exception, and call another activity function if needed
        // (e.g. alert an administrator or notify the customer)
    }
}
```
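The policy above waits 30 seconds between attempts. To show what a growing ("custom") back-off looks like in numbers, here is a small stand-alone calculation. `RetryDelays` is a hypothetical helper that assumes each delay is the previous one multiplied by a coefficient, capped at a maximum; it illustrates the idea and is not the library's code.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not the Durable Functions implementation: the wait before
// each retry, assuming delay n = firstRetry * coefficient^(n-1), capped at maxDelay.
List<TimeSpan> RetryDelays(TimeSpan firstRetry, int maxAttempts, double coefficient, TimeSpan maxDelay)
{
    var delays = new List<TimeSpan>();
    // maxAttempts includes the first try, so there are maxAttempts - 1 retries
    for (var retry = 1; retry < maxAttempts; retry++)
    {
        var ms = firstRetry.TotalMilliseconds * Math.Pow(coefficient, retry - 1);
        delays.Add(TimeSpan.FromMilliseconds(Math.Min(ms, maxDelay.TotalMilliseconds)));
    }
    return delays;
}

// The policy used above: 4 attempts, 30 seconds apart (coefficient 1 means no growth).
var fixedDelays = RetryDelays(TimeSpan.FromSeconds(30), 4, 1.0, TimeSpan.FromMinutes(10));
// The same policy with a coefficient of 2: each wait doubles.
var doubling = RetryDelays(TimeSpan.FromSeconds(30), 4, 2.0, TimeSpan.FromMinutes(10));

Console.WriteLine(string.Join(", ", fixedDelays)); // 00:00:30, 00:00:30, 00:00:30
Console.WriteLine(string.Join(", ", doubling));    // 00:00:30, 00:01:00, 00:02:00
```

A growing delay gives a struggling payment provider more breathing room than hammering it at a fixed interval, while the cap stops later waits from becoming unreasonably long.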

Benefits of Durable Functions

As you can see, Durable Functions addresses all the issues with implementing this workflow manually.

- It allows us to have an orchestrator that very straightforwardly shows us what the complete workflow looks like.

- It lets us put each individual "activity" in the workflow into its own function (giving us improved scalability and resilience).

- It hides the complexity of handling queue messages and passing state in and out of functions, storing state for us transparently in a "task hub" which is implemented using Azure Table Storage and Queues.

- It makes it really easy to add retries with backoffs and exception handling to our workflows.

- It opens the door to more advanced workflow patterns, such as performing activities in parallel or waiting for human interaction (although those are topics for a future post).

So although Durable Functions are still in preview and have a few issues that need ironing out before they go live, I am very excited about their potential. Whilst it is possible to implement similar workflow management code yourself, and there are various other frameworks offering similar capabilities, this really is remarkably simple to implement and an obvious choice if you're already using Azure Functions.

A place for me to share what I'm learning: Azure, .NET, Docker, audio, microservices, and more...


Comments

DougWare

This is a great article, but I think your assertion that message-oriented designs violate single responsibility "because each function knows how to perform its step in the workflow and what the next step should be" is spurious. The whole point of most messaging-based designs is that the sender need only know how to send the message - what happens after that could be anything.

I only point this out because sometimes a workflow is the best approach and other times messaging is the best approach, and I am personally trying to decide how to choose.

Mark Heath

The "single responsibility principle" is sometimes stated as "a piece of code should only have one reason to change". So I mean that I must change this function if I want to change how the activity behaves, and also if I want to change what the next activity in the workflow is. You can attempt to work round that by saying that each function sends an "I'm finished" message (i.e. a domain event) to a topic when it's done, but without adding the indirection of the "process manager", all you do is shift each activity from knowing what comes next to knowing what it comes after. In either case there is still no single place you can go to discover the definition of the whole workflow.

I do agree, though, that in some cases it's not really a "workflow" as such. You have an action that sends out a domain event when it completes, so that other code can run as a result, but we don't really care whether anyone is listening or not. Auditing, reporting, etc. are examples that might fit this category - they are not strictly part of the "workflow" but can be triggered by things that happen within a workflow.