ADC 2022 Highlights

Earlier this week I attended the Audio Developer Conference for the first time ever. In this slightly long and rambling post, I want to share some of my highlights from the event. But first, a bit about why I attended.

My background in audio programming

Audio programming has always been something I've found fascinating. The first program I can remember writing was an attempt to make our family BBC Micro Model B play the tune "Three Blind Mice". My first ever piece of "freeware" (I hadn't heard of open source) was an app that could load SoundFonts into the RAM on a Sound Blaster AWE card (which I needed because the official Creative Labs Windows drivers were too buggy to use). And of course almost 20 years ago (9 Dec 2002) I wrote the first lines of NAudio, a project that is still going today, although I only have time for occasional updates.

For the most part, I don't do audio programming in my day job, but I did get to write the audio (& video) playback engine for an application that plays back telephony and radio calls in sync with video and screen recordings, which relied heavily on my NAudio work.

I've also created dozens, if not hundreds, of smaller audio-related development projects, including JSFX effects for the REAPER DAW, a Silverlight audio player, lots of MIDI file processing utilities, and a bunch of web audio tools.

So I'd always wanted the chance to attend an ADC, to hear from the pros about what's new in the industry, and hopefully to learn a few new things that I could put into practice.

Machine learning and AI

One of the recurring topics was using machine learning and AI with audio. Obviously plenty of people have seen the astonishing results from DALL·E 2 and Midjourney and wondered whether the same principles could apply to audio, with computers generating complete musical compositions from a simple textual prompt.

As interesting as that would be, most of the sessions involving machine learning applied it to more focused problems such as pitch detection, virtual analog modeling, and timbre transfer. One of the hot topics was "Differentiable Digital Signal Processing" (DDSP), which can be used to resynthesize audio.

There seems to be a lot of effort going into making ML more accessible to audio programmers. Audio tools are often written in C++, while machine learning leans heavily on Python, so we need ways of bridging the gap between the two worlds and making it much easier to incorporate a machine learning model into an application written in another language.

I particularly enjoyed the demo of Syntheon by Hao Hao Tan, which can listen to a synthesizer sound and attempt to recreate it using the Vital synthesizer, with support for Dexed a work in progress. I could see that forming the basis of a very useful sound design tool.

DSP and maths

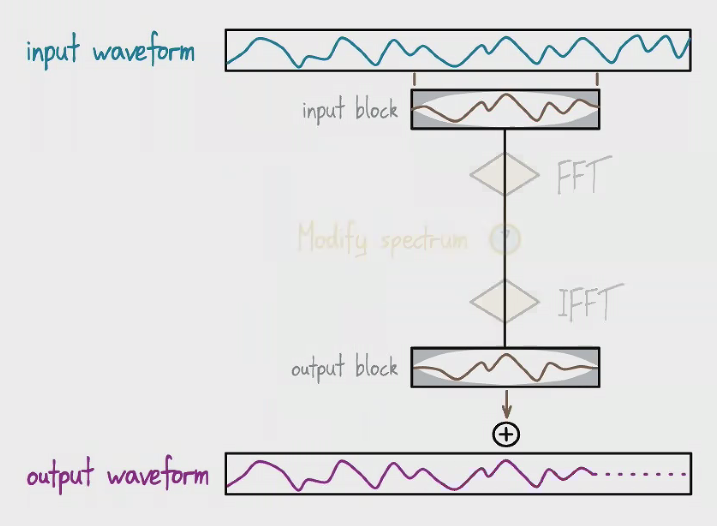

Unsurprisingly, there was a lot of DSP and maths at the conference. The DSP workshop from dynamic_cast was an excellent introduction to some of the basics of sampling, filters and FFTs, and gave me a chance to use MATLAB for the first time.

For the most part the speakers did a great job of keeping some very complex maths accessible. Two of my favourite presentations covered pitch shifting. Xavier Riley gave a great overview of how auto-tune works; it's a talk I could have done with hearing a decade ago, when I blundered my way to my own auto-tune implementation in .NET.
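Auto-tune style correction broadly comes down to two steps: detect the pitch of the incoming audio, then shift it towards the nearest "correct" note. The second step's target calculation can be sketched in a few lines of Python. This is a minimal sketch assuming twelve-tone equal temperament with A4 tuned to 440 Hz; pitch detection and the shifting itself are left out, and the function names are hypothetical rather than taken from the talk or any product.

```python
import math

A4_HZ = 440.0  # assumed reference tuning (A4 = 440 Hz)


def nearest_semitone_hz(freq_hz: float, ref_hz: float = A4_HZ) -> float:
    """Snap a detected frequency to the nearest equal-tempered semitone."""
    # Distance from the reference note, measured in (fractional) semitones
    semitones = 12.0 * math.log2(freq_hz / ref_hz)
    # Round to the closest whole semitone and convert back to Hz
    return ref_hz * 2.0 ** (round(semitones) / 12.0)


def correction_ratio(freq_hz: float, ref_hz: float = A4_HZ) -> float:
    """Pitch-shift ratio needed to move the detected pitch onto the target note."""
    return nearest_semitone_hz(freq_hz, ref_hz) / freq_hz
```

For example, a sung note detected at 445 Hz snaps back to 440 Hz, giving a correction ratio of about 0.989. A real corrector would typically also smooth that ratio over time (often exposed as a "retune speed" control) rather than jumping straight to the target.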

For me, the best presentation was from Geraint Luff of Signalsmith Audio, showing how to create a hybrid polyphonic pitch-shifting algorithm that combines the benefits of two other approaches. I've followed his work for several years thanks to his brilliant free JSFX effects for REAPER, and he has a remarkable knack for making some very clever algorithms seem straightforward.

Languages and libraries

I was also very excited to learn about the new CMajor language (hopefully the topic for a separate post shortly). CMajor is an audio-specific DSL, and I had it up and running in under a minute thanks to the CMajor VS Code extension. The big challenge of course is for a language like this to get traction in the industry, but I think there is huge potential if it does catch on.

There were several talks showcasing audio libraries. Obviously, as the author of an audio library, I know what an undertaking this can be. One that stood out was CHOC from Julian Storer, which is a header-only collection of miscellaneous useful things. I liked the simplicity of his approach: keeping it very simple so you only use the bits you need. At the other end of the spectrum is JUCE, a much more fully featured framework for building audio plugins in all the most common formats. There's also a DSP library from Signalsmith that looks promising.

It's great to see a healthy ecosystem of audio-related libraries that take some of the pain out of audio development (which I'll discuss more a bit later).

GPU Audio

GPU Audio were one of the main sponsors of the conference, and their recent demonstrations of real-time plugins running in your DAW at low latencies have caused a lot of excitement (and maybe a bit of skepticism too!). Personally, I think it will be a great step forward if we can push at least a portion of the heavy lifting of audio effects onto the GPU, which often sits largely idle while the CPU does most of the work. I will be watching their progress closely over the coming years.

Web Audio

Several talks focused on audio on the web, including helpful advice for dealing with pain points such as synchronization and tempo. The already impressive Web Audio Modules now has a version 2.0, and opens possibilities for DAWs hosted in the browser.

Whilst I'm sure that serious music producers will still prefer a native DAW for many years to come, it's quite possible that the next generation will start their journey in the browser on tablets and phones, and may never feel the need to transition away.

MIDI 2.0

There were a number of talks on MIDI 2.0, which has been a very long time coming and still isn't completely finalized. It was good to see Apple, Google and Microsoft all presenting on the support they are already building at the OS level for the new protocol.

As nice as the new additions in MIDI 2.0 are, its success is very much tied to how many manufacturers are willing to embrace it. The more software and hardware products that support it, the more valuable it will become. So hopefully in the coming years we'll start to see momentum building around it.

Network Audio

Finally, in a few sessions ELK talked about the challenges of delivering low-latency audio over the network. Dante were also sponsors and discussed their DAL SDK. Both are very impressive technologies (with quite different use cases), but I'd really love to see completely open standards emerge in this area that are widely supported by hardware manufacturers, allowing you to easily form real-time audio networks both on premises and over the internet.

Audio programming is (still) really hard

One of the most unfortunate things about audio programming is that it is still very difficult to get into. The majority of development is heavily focused on C++, which is a frustrating barrier to entry for developers coming from other languages. You also quickly run into the challenges of supporting multiple competing standards and trying to write applications that run cross-platform.

Then there's the complex maths and DSP. In Stefano D'Angelo's excellent "LEGOfying audio DSP Engines" talk he illustrated this really well by explaining all the considerations that go into something as seemingly trivial as implementing a volume slider (oh and by the way he's also created an audio DSL and a DSP library). And if you want to make something even a little more exciting than a volume slider, chances are you're going to have to learn about filter design, FFTs, and even circuit emulation and machine learning.
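To give a flavour of what's involved, here is a minimal sketch of just two of those considerations, written as a per-sample Python loop purely for readability: converting the slider's decibel value to a linear gain, and smoothing gain changes so that a fast slider move doesn't produce audible "zipper" noise. It assumes a simple one-pole smoother with a hypothetical 20 ms time constant; it is not the approach from the talk, and it ignores other concerns such as safely handing the value from the UI thread to the audio thread.

```python
import math


def db_to_gain(db: float) -> float:
    """Convert a decibel value to a linear amplitude multiplier."""
    return 10.0 ** (db / 20.0)


class SmoothedGain:
    """Volume control with one-pole smoothing to avoid zipper noise (illustrative design)."""

    def __init__(self, sample_rate: float, time_ms: float = 20.0, initial_db: float = 0.0):
        # Coefficient chosen so each time constant closes about 63% of the remaining gap
        self.coeff = math.exp(-1.0 / (sample_rate * time_ms / 1000.0))
        self.current = db_to_gain(initial_db)
        self.target = self.current

    def set_db(self, db: float) -> None:
        # A slider move only changes the target; the audio loop ramps towards it
        self.target = db_to_gain(db)

    def process(self, block: list[float]) -> list[float]:
        out = []
        for x in block:
            # Move a fraction of the way towards the target on every sample
            self.current = self.target + self.coeff * (self.current - self.target)
            out.append(x * self.current)
        return out
```

At a 48 kHz sample rate, moving the slider from 0 dB to -20 dB then ramps the gain smoothly from 1.0 towards 0.1 instead of jumping there in a single sample.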

That's one of the reasons I'm excited about the potential of CMajor, one of whose features is the ability to turn your code into a plugin using JUCE. They're not the only people to think of this idea. David Zicarelli of Cycling '74, one of the keynote speakers, demoed his RNBO product, which lets you create instruments and effects in a graphical interface and export them to plugin or web audio formats. Blue Cat Audio's Plug'n Script is another audio DSL that allows for rapid prototyping and creation of effects. Tools like these have the potential to open up the world of creating custom effects to a much wider audience.

Summary

I thoroughly enjoyed my experience attending ADC, so a big thank you to the organizers and speakers. In particular, I thought they did a great job of making the virtual attendance experience as smooth as it could be. It's great to see how much innovation is happening in the audio development space. But I also hope that the barrier to entry continues to reduce to make it much easier for beginner developers to implement their own creative ideas for audio-related apps without needing years of training first.

By the way, I'm currently in the process of replacing the commenting system on this blog (got fed up with Disqus' awful clickbaity ads), so apologies if you wanted to comment. In the interim, you can always discuss with me @mark_heath on Twitter.

A place for me to share what I'm learning: Azure, .NET, Docker, audio, microservices, and more...