How to use Azure Blob Storage with Azure Web Sites and MVC 4

I have been building a website recently using Azure Web Site hosting and ASP.NET MVC 4. As someone who doesn’t usually do web development, there has been a lot of new stuff for me to learn. I wanted to allow website users to upload images, and store them in Azure. Azure blob storage is perfect for this, but I discovered that a lot of the tutorials assume you are using Azure “web roles” instead of Azure web sites, meaning that a lot of the instructions aren’t applicable. So this is my guide to how I got it working with Azure web sites.

Step 1 – Set up an Azure Storage Account

This is quite straightforward in the Azure portal. Just create a storage account, giving it an account name. Each storage account can have many “containers”, so you can share the same storage account between several sites if you want.

Step 2 – Install the Azure SDK

This is done using the Web Platform Installer. I installed the 1.8 version for VS 2012.

Step 3 – Set up the Azure Storage Emulator

It seems that with Azure web role projects, you can configure Visual Studio to auto-launch the Azure Storage emulator, but I don’t think that option is available for regular ASP.NET MVC projects hosted on Azure web sites. The emulator is csrun.exe and it took some tracking down as Microsoft seem to move it with every version of the SDK. It needs to be run with the /devstore command line parameter (the quotes are needed because the path contains spaces):

"C:\Program Files\Microsoft SDKs\Windows Azure\Emulator\csrun.exe" /devstore

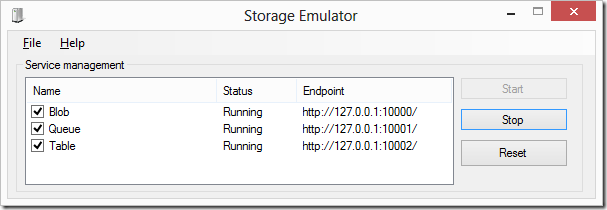

To make life easy for me, I added an option to my External Tools list in Visual Studio so I could quickly launch it. Once it starts up, a new icon appears in the system tray, giving you access to the UI, which shows you what ports it is running on:

Step 4 – Set up a Development Connection String

While we are in development, we want to use the emulator, and this requires a connection string. Again, most tutorials assume you are using an “Azure Web Role”, but for ASP.NET MVC sites, we need to go directly to our web.config and enter a new connection string ourselves. The connection string required is fairly simple:

    <connectionStrings>
        <add name="StorageConnection" connectionString="UseDevelopmentStorage=true"/>
    </connectionStrings>

Step 5 – Upload an image in ASP.NET MVC 4

This is probably very basic stuff to most web developers, but it took me a while to find a good tutorial. This is how to make a basic form in Razor syntax to let the user select and upload a file:

    @using (Html.BeginForm("ImageUpload", "Admin", FormMethod.Post, new { enctype = "multipart/form-data" }))
    {
        <div>Please select an image to upload</div>
        <input name="image" type="file" />
        <input type="submit" value="Upload Image" />
    }

And now in my AdminController’s ImageUpload method, I can access details of the uploaded file through the Request.Files collection, which returns an HttpPostedFileBase for each named file input:

    [HttpPost]
    public ActionResult ImageUpload()
    {
        // "image" matches the name attribute of the file input in the form
        var image = Request.Files["image"];

        // With no file selected, the browser can still post an empty entry
        if (image == null || image.ContentLength == 0)
        {
            ViewBag.UploadMessage = "Failed to upload image";
        }
        else
        {
            ViewBag.UploadMessage = String.Format("Got image {0} of type {1} and size {2}",
                image.FileName, image.ContentType, image.ContentLength);

            // TODO: actually save the image to Azure blob storage
        }

        return View();
    }

Step 6 – Add Azure references

Now we need to add a project reference to Microsoft.WindowsAzure.StorageClient, which gives us access to the Microsoft.WindowsAzure and Microsoft.WindowsAzure.StorageClient namespaces.

Step 7 – Connect to Cloud Storage Account

Most tutorials will tell you to connect to your storage account by simply passing in the name of the connection string:

var storageAccount = CloudStorageAccount.FromConfigurationSetting("StorageConnection");

However, because we are using an Azure web site and not a Web Role, this throws an exception ("SetConfigurationSettingPublisher needs to be called before FromConfigurationSetting can be used"). There are a few ways to fix this, but I think the simplest is to call Parse, and pass in your connection string directly:

    var storageAccount = CloudStorageAccount.Parse(
        ConfigurationManager.ConnectionStrings["StorageConnection"].ConnectionString);

Step 8 – Create a Container

Our storage account can have many “containers”, so we need to provide a container name. For this example, I’ll call it “productimages” and give it public access.

    // blobStorage is a CloudBlobClient declared elsewhere (for example as a field)
    blobStorage = storageAccount.CreateCloudBlobClient();
    CloudBlobContainer container = blobStorage.GetContainerReference("productimages");

    // CreateIfNotExist returns true only when the container was newly created
    if (container.CreateIfNotExist())
    {
        // configure container for public access
        var permissions = container.GetPermissions();
        permissions.PublicAccess = BlobContainerPublicAccessType.Container;
        container.SetPermissions(permissions);
    }

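The name you select for your container has to be a valid DNS name: 3 to 63 characters, using only lowercase letters, numbers and hyphens, starting and ending with a letter or number, with no consecutive hyphens. If it isn’t (capital letters and spaces are the usual culprits), you’ll get a strange “One of the request inputs is out of range” error.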
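Since the error message gives no hint that the name is the problem, it can help to check a container name up front. The following is only a sketch: ContainerNames and IsValid are hypothetical names of my own, not part of the Azure SDK, and the pattern encodes the documented naming rules (3 to 63 characters, lowercase letters, numbers and hyphens, starting and ending with a letter or number, no consecutive hyphens).

```csharp
using System.Text.RegularExpressions;

// Hypothetical helper, not part of the Azure SDK.
public static class ContainerNames
{
    // First character: lowercase letter or digit.
    // Then 2 to 62 more characters, where a hyphen is only allowed
    // if it is immediately followed by a letter or digit.
    private static readonly Regex ValidName =
        new Regex(@"^[a-z0-9](?:[a-z0-9]|-(?=[a-z0-9])){2,62}\z");

    public static bool IsValid(string name)
    {
        return name != null && ValidName.IsMatch(name);
    }
}
```

With this, ContainerNames.IsValid("productimages") passes, while "ProductImages" and "product images" are rejected before any request is sent.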

Note: the code I used as the basis for this part (the Introduction to Cloud Services lab from the Windows Azure Training Kit) holds the CloudBlobClient as a static variable, and has the code to initialise the container in a lock. I don’t know if this is to avoid a race condition of trying to create the container twice, or if creating a CloudBlobClient is expensive and should only be done once if possible. Other accesses to CloudBlobClient are not done within the lock, so it appears to be threadsafe.

Step 9 – Save the image to a blob

Finally we are ready to actually save our image. We need to give it a unique name, for which we will use a Guid, followed by the original extension, but you can use whatever naming strategy you like. Including the container name in the blob name here saves us an extra call to blobStorage.GetContainerReference. As well as naming it, we must set its ContentType (also available on our HttpPostedFileBase) and upload the data, which HttpPostedFileBase makes available as a stream.

    string uniqueBlobName = string.Format("productimages/image_{0}{1}",
        Guid.NewGuid().ToString(), Path.GetExtension(image.FileName));
    CloudBlockBlob blob = blobStorage.GetBlockBlobReference(uniqueBlobName);
    blob.Properties.ContentType = image.ContentType;
    blob.UploadFromStream(image.InputStream);
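If blob names are built in more than one place, the naming strategy can be pulled into a small helper. This is a sketch rather than anything from the SDK: BlobNames and Create are hypothetical names, and lowercasing the extension is my own assumption (it keeps names consistent, but is not required).

```csharp
using System;
using System.IO;

// Hypothetical helper, not part of the Azure SDK.
public static class BlobNames
{
    // Builds "<container>/image_<guid><extension>" from the uploaded file's name.
    public static string Create(string containerName, string originalFileName)
    {
        // Path.GetExtension returns "" when there is no extension;
        // the null check avoids calling ToLowerInvariant on a null result.
        string extension = Path.GetExtension(originalFileName ?? string.Empty)
            .ToLowerInvariant();

        return string.Format("{0}/image_{1}{2}", containerName, Guid.NewGuid(), extension);
    }
}
```

In the upload action this would be called as BlobNames.Create("productimages", image.FileName), and the result passed to GetBlockBlobReference as before.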

Note: One slightly confusing choice you must make is whether to create a block blob or a page blob. Page blobs seem to be targeted at blobs that need random-access reads or writes (maybe video files, for example), which we don’t need for serving images, so a block blob seems the best choice.

Step 10 – Finding the blob Uri

Now our image is in blob storage, but where is it? We can find out after creating it, with a call to blob.Uri:

blob.Uri.ToString();

In our Azure storage emulator environment, this returns something like:

http://127.0.0.1:10000/devstoreaccount1/productimages/image_ab16e2d7-5cec-40c9-8683-e3b9650776b3.jpg

Step 11 – Querying the container contents

How can we keep track of what we have put into the container? From within Visual Studio, in the Server Explorer tool window, there should be a node for Windows Azure Storage, which lets you see what containers and blobs are on the emulator. You can also delete blobs from there if you don’t want to do it in code.

The Azure portal has similar capabilities allowing you to manage your blob containers, view their contents, and delete blobs.

If you want to query all the blobs in your container from code, all you need is the following:

    var imagesContainer = blobStorage.GetContainerReference("productimages");
    var blobs = imagesContainer.ListBlobs();

Step 12 – Create the Real Connection String

So far we’ve done everything against the storage emulator. Now we need to actually connect to our Azure storage. For this we need a real connection string, which looks like this:

DefaultEndpointsProtocol=https;AccountName=YourAccountName;AccountKey=YourAccountKey

The account name is the one you entered in the first step, when you created your Azure storage account. The account key is available in the Azure Portal, by clicking the “Manage Keys” link at the bottom. If you are wondering why there are two keys, and which to use, it is simply so you can change your keys without downtime, so you can use either.

Note: most examples show DefaultEndpointsProtocol as https, which as far as I can tell, simply means that by default the Uri it returns starts with https. This doesn’t stop you getting at the same image with http. You can change this value in your connection string at any time according to your preference.
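Because the connection string is just semicolon-separated Key=Value pairs, it is easy to sanity-check before deploying, for example to catch the YourAccountName and YourAccountKey placeholders being left in place. The sketch below is an illustration under that assumption: StorageConnectionCheck, Parse and LooksUsable are hypothetical names of my own, and this is not how the SDK itself parses the string.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of the Azure SDK.
public static class StorageConnectionCheck
{
    // Splits "Key=Value;Key=Value" into a dictionary. Only the first '=' in
    // each part separates key from value, because account keys are base64
    // and usually end in '=' padding.
    public static Dictionary<string, string> Parse(string connectionString)
    {
        var settings = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
        var parts = (connectionString ?? string.Empty)
            .Split(new[] { ';' }, StringSplitOptions.RemoveEmptyEntries);

        foreach (string part in parts)
        {
            int separator = part.IndexOf('=');
            if (separator <= 0) continue; // skip parts without a key
            settings[part.Substring(0, separator).Trim()] =
                part.Substring(separator + 1).Trim();
        }
        return settings;
    }

    // True for the emulator string, or when both account settings are
    // present and are no longer the placeholder values used in this post.
    public static bool LooksUsable(string connectionString)
    {
        var settings = Parse(connectionString);

        string useEmulator;
        if (settings.TryGetValue("UseDevelopmentStorage", out useEmulator))
            return string.Equals(useEmulator, "true", StringComparison.OrdinalIgnoreCase);

        string name, key;
        return settings.TryGetValue("AccountName", out name)
            && name.Length > 0 && name != "YourAccountName"
            && settings.TryGetValue("AccountKey", out key)
            && key.Length > 0 && key != "YourAccountKey";
    }
}
```

A check like this only looks at the shape of the string; whether the account name and key are actually correct is only known once a request to storage succeeds.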

Step 13 – Create a Release Web.config transform

To make sure our live site is running against our Azure storage account, we’ll need to create a web.config transform as the Web Deploy wizard doesn’t seem to know about Azure storage accounts and so can’t offer to do this automatically like it can with SQL connection strings.

Here’s my transform in Web.Release.config:

    <connectionStrings>
        <add name="StorageConnection"
             connectionString="DefaultEndpointsProtocol=https;AccountName=YourAccountName;AccountKey=YourAccountKey"
             xdt:Transform="SetAttributes" xdt:Locator="Match(name)"/>
    </connectionStrings>

Step 14 – Link Your Storage Account to Your Web Site

Finally, in the Azure portal, we need to ensure that our web site is allowed to access our storage account. Go to your web site, select “Links” and add a link to your Storage Account, which will set up the necessary firewall permissions.

Now you're ready to deploy your site and use Azure blob storage with an Azure Web Site.

A place for me to share what I'm learning: Azure, .NET, Docker, audio, microservices, and more...

Comments

Great write up. Really easy to follow and I'm up and running in no time. I was prototyping a new website feature to allow users to upload files and I wanted to host it on Azure websites just to demo it to a client. But, uploading files to Azure websites was a bit of a black hole to me. Seriously, your article was perfect...Thanks!

Paul Apostolos

Thanks Paul, this is one of those "note to self" posts - wanted to be sure I'd remember what I'd done.

Mark H

Thanks for this 'note to self', it helped me out because I forgot to set permissions on my container to public. Now I can stop repeatedly banging my head against the wall.

Mark Gray

Good job mate.

This is just what I was looking for, thanks.

Joel

Very good to find your blog i am going to follow it, very nice article

Naveed Ahmad

Thank you for the post. I have a TFS project that was an MVC EF site converted to Azure. I couldn't actually get it to run on my Visual Studio 2013 development environment. I can tell by your examples that the previous developer did what you did. My problem however is that we are moving the website and database off Azure and quite frankly it isn't going well and I believe it's due to blob storage and local development emulators. Anyhow your post is helping me greatly in reverse engineering the solution to non-Azure. Why get off azure you may ask. We are on free trial's for everything except a backup database. The bill for that was 256 for one month. Once the bills for everything else starts kicking in Microsoft will own our business.

Anonymous