Simple HTTPS with Caddy Ingress on AKS

I recently watched the Pluralsight course Deploying ASP.NET Core 6 Using Kubernetes by Marcel de Vries. It's an excellent course, and I particularly appreciated that he devotes a module to improving the security of the application, something that is often omitted from demos.

To enable HTTPS for the frontend website in his demo app, he used the Caddy web server, which has the impressive capability of automatically obtaining and renewing TLS certificates for your sites.

I was impressed with how simple it was to set up, so I decided to try it on the GloboTicket Dapr demo application from my Dapr 1 Fundamentals Pluralsight course. In that demo, I had just used a LoadBalancer service to give me a public endpoint, but I hadn't set up HTTPS, as I wanted to keep things as simple as possible for people to follow along and copy what I'd done (and in my experience, anything involving certificates gets complicated very fast).

In this post, I'll run through the steps I took to update my GloboTicket Dapr demo application to use Caddy for ingress on AKS.

Services, Ingress, and Ingress Controllers

It's worth understanding the difference between the various Kubernetes resource types involved: services, ingresses, and ingress controllers. This can feel overwhelming at first, so do spend some time reading up on how they differ.

For our purposes, we're going to need one of each. First, we need a "service" for our frontend application. In GloboTicket I'd originally set this up as a LoadBalancer in order to get a public IP address, but I've now changed it back to ClusterIP. The definition below (from frontend.yaml) shows my new service, which forwards traffic on port 8080 to my "frontend" containers listening on port 80.

```yaml
kind: Service
apiVersion: v1
metadata:
  name: frontend
  labels:
    app: frontend
spec:
  selector:
    app: frontend
  ports:
    - protocol: TCP
      port: 8080
      targetPort: 80
  type: ClusterIP
```

I updated my service definition on the cluster with kubectl apply -f .\frontend.yaml.
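To make the `port` versus `targetPort` distinction concrete, here is a minimal Python sketch that mirrors the Service above as a plain dictionary and resolves which container port a request to the Service port ends up on. The `frontend_service` dict and the `target_port_for` helper are hypothetical illustrations, not how kube-proxy actually forwards traffic; they only model the mapping declared in the YAML.

```python
from typing import Any, Union

# Plain-dict mirror of the frontend Service in frontend.yaml (illustration only).
frontend_service: dict[str, Any] = {
    "kind": "Service",
    "apiVersion": "v1",
    "metadata": {"name": "frontend", "labels": {"app": "frontend"}},
    "spec": {
        "selector": {"app": "frontend"},
        "ports": [{"protocol": "TCP", "port": 8080, "targetPort": 80}],
        "type": "ClusterIP",
    },
}


def target_port_for(service: dict[str, Any], port: int, protocol: str = "TCP") -> Union[int, str]:
    """Return the pod port that traffic sent to `port` on the Service is forwarded to.

    Hypothetical helper: models the port -> targetPort mapping only. Kubernetes
    defaults protocol to TCP and targetPort to the same value as port when omitted;
    targetPort may also be a named (string) container port.
    """
    for entry in service["spec"]["ports"]:
        if entry["port"] == port and entry.get("protocol", "TCP") == protocol:
            return entry.get("targetPort", entry["port"])
    raise KeyError(f"service does not expose {protocol} port {port}")


if __name__ == "__main__":
    # Traffic to frontend:8080 inside the cluster lands on port 80 in the pods.
    print(target_port_for(frontend_service, 8080))  # prints 80
```

Because the type is ClusterIP, only clients inside the cluster (such as the ingress controller) can reach `frontend:8080`; nothing is exposed publicly by this Service on its own.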

We also need an "ingress controller" (which will use Caddy), and then an "ingress" which basically dictates the rules governing which domain names will map to which services. Let's see how to add them next...

Installing the Caddy Ingress Controller

To install the Caddy ingress controller we're going to follow the instructions here. I chose to use helm, but you may prefer the option that generates a Kubernetes YAML file that you can modify yourself.

Note that we need to provide an email address, and I've also added an "annotation" I want on the Caddy load balancer, which I'll explain shortly.

```powershell
# create a namespace
kubectl create namespace caddy-system

# install Caddy
$MY_EMAIL="you@example.com" # needed for Let's Encrypt - update with your email
$DNS_PREFIX="globoticket123" # must be unique within the Azure region
helm install `
    --namespace=caddy-system `
    --repo https://caddyserver.github.io/ingress/ `
    --atomic `
    mycaddy `
    caddy-ingress-controller `
    --set ingressController.config.email=$MY_EMAIL `
    --set loadBalancer.annotations."service\.beta\.kubernetes\.io/azure-dns-label-name"=$DNS_PREFIX
```

Note: this initially failed for me, and I had to run `helm repo update` first, before `helm install`.
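The fiddly part of that command is the annotation key: Helm's `--set` treats dots as nesting separators, so the dots inside `service.beta.kubernetes.io/azure-dns-label-name` have to be escaped with backslashes to keep it a single map key. If you're scripting the install from something other than PowerShell, a minimal Python sketch like the one below (my own illustration, not part of the Caddy instructions) builds the same arguments as a list, so no shell quoting is involved. The function names are hypothetical and the email address is a placeholder.

```python
import subprocess

CADDY_REPO = "https://caddyserver.github.io/ingress/"
ANNOTATION_KEY = "service.beta.kubernetes.io/azure-dns-label-name"


def escape_helm_key(key: str) -> str:
    """Escape dots so helm --set treats the key as one map key, not a nested path."""
    return key.replace(".", r"\.")


def build_helm_install_args(email: str, dns_prefix: str) -> list[str]:
    """Build the argv for the helm install shown above (hypothetical helper)."""
    return [
        "helm", "install",
        "--namespace=caddy-system",
        "--repo", CADDY_REPO,
        "--atomic",
        "mycaddy",
        "caddy-ingress-controller",
        "--set", f"ingressController.config.email={email}",
        "--set", f"loadBalancer.annotations.{escape_helm_key(ANNOTATION_KEY)}={dns_prefix}",
    ]


def run_helm_install(email: str, dns_prefix: str) -> None:
    """Run the install against the current kubectl context.

    A list is passed to subprocess.run, so no shell interprets the backslashes.
    """
    subprocess.run(build_helm_install_args(email, dns_prefix), check=True)


if __name__ == "__main__":
    # Placeholder values: print the command rather than running it.
    print(" ".join(build_helm_install_args("you@example.com", "globoticket123")))
```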

Create an Ingress

Although we have an ingress controller, we don't have any ingresses defined yet. An ingress lets us map a domain name to a service, and we have two options for the domain name.

First, we can use our own custom domain name, such as globoticket123.markheath.net. This is what we'd want for a production cluster.

Second, we can take advantage of a special AKS feature that assigns a DNS name to a load balancer's public IP address. That's what the service.beta.kubernetes.io/azure-dns-label-name annotation does. If I set it to "globoticket123" on a LoadBalancer service, I get a domain name like globoticket123.eastus.cloudapp.azure.com. This is super convenient for dev/test scenarios where you don't really need a nice-looking domain name.
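The resulting name follows the pattern `<label>.<region>.cloudapp.azure.com`, where the region is the one hosting the load balancer's public IP. Here is a minimal Python sketch of that pattern. The label check is an assumption on my part, approximating Azure's rule for public IP DNS labels (3 to 63 characters, lowercase letters, digits and hyphens, starting with a letter and ending with a letter or digit); Azure itself remains the real validator, and `azure_dns_name` is a hypothetical helper name.

```python
import re

# Assumed approximation of Azure's public IP DNS label rule:
# 3-63 chars, lowercase letters/digits/hyphens, starts with a letter,
# ends with a letter or digit.
LABEL_PATTERN = re.compile(r"[a-z][a-z0-9-]{1,61}[a-z0-9]")


def azure_dns_name(label: str, region: str) -> str:
    """Return the FQDN Azure assigns when azure-dns-label-name is set on a LoadBalancer service."""
    if not LABEL_PATTERN.fullmatch(label):
        raise ValueError(f"invalid DNS label: {label!r}")
    return f"{label}.{region}.cloudapp.azure.com"


if __name__ == "__main__":
    print(azure_dns_name("globoticket123", "eastus"))
    # prints globoticket123.eastus.cloudapp.azure.com
```

This is also why the label needs to be unique: two load balancers in the same region cannot share one FQDN.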

And of course, there's nothing stopping you from defining rules for multiple domain names. In the ingress definition below, I'm saying that globoticket123.markheath.net should be handled by the "frontend" service, which is listening on port 8080 (as defined in frontend.yaml above).

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: globoticket
  namespace: default
  annotations:
    kubernetes.io/ingress.class: caddy
spec:
  rules:
    - host: globoticket123.markheath.net
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: frontend
                port:
                  number: 8080
```

I can apply this to my cluster with kubectl apply:

```powershell
kubectl apply -f .\ingress.yaml
```
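Conceptually, the ingress controller picks a backend by first matching the request's Host header against each rule's `host`, then matching the URL path against that rule's paths. The minimal Python sketch below models that lookup for the rule above. The `RULES` table and both functions are hypothetical and say nothing about how Caddy implements it internally; `prefix_matches` follows the Kubernetes definition of `pathType: Prefix` (compare `/`-separated segments, so `/foo/bar` matches `/foo/bar/baz` but not `/foo/barbaz`), and the longest matching path wins when several match.

```python
from typing import Optional

# Hypothetical in-memory copy of the ingress rules: host -> [(path prefix, service, port)].
RULES: dict[str, list[tuple[str, str, int]]] = {
    "globoticket123.markheath.net": [("/", "frontend", 8080)],
}


def prefix_matches(rule_path: str, request_path: str) -> bool:
    """pathType: Prefix matching, compared element by element on '/'-separated segments."""
    rule_parts = [p for p in rule_path.split("/") if p]
    request_parts = [p for p in request_path.split("/") if p]
    return request_parts[: len(rule_parts)] == rule_parts


def route(host: str, path: str) -> Optional[tuple[str, int]]:
    """Return (service, port) for a request, or None if no rule matches."""
    hostname = host.split(":")[0].lower()  # drop any :port from the Host header
    candidates = [
        (rule_path, service, port)
        for rule_path, service, port in RULES.get(hostname, [])
        if prefix_matches(rule_path, path)
    ]
    if not candidates:
        return None
    # When several paths match, the longest one takes precedence.
    _, service, port = max(candidates, key=lambda c: len(c[0]))
    return service, port


if __name__ == "__main__":
    print(route("globoticket123.markheath.net", "/events"))  # ('frontend', 8080)
    print(route("example.com", "/"))  # None: no rule for this host
```

Because the only path is `/`, every request for that host goes to the frontend service; a request for a host with no rule gets no backend at all.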

Configure DNS Records

Now that everything is set up on the cluster, we need to configure our DNS records. If I chose the azure-dns-label-name option, I don't necessarily have to do anything else: I can visit my site at https://globoticket123.eastus.cloudapp.azure.com and Caddy will automatically fetch a certificate for us.

But if I have my own domain name, I have two options. I can find the external IP of the Caddy load balancer (you can discover this with kubectl get svc -n caddy-system) and set up an A record pointing my domain name (e.g. globoticket123.markheath.net) at that IP address. Or I can set up a CNAME record that maps globoticket123.markheath.net onto globoticket123.eastus.cloudapp.azure.com.
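For reference, the two alternatives look like this as zone-file records. The minimal Python sketch below generates them and adds a resolver check. The IP address is a placeholder from the reserved documentation range (substitute the EXTERNAL-IP that kubectl reports), the 300-second TTL is an arbitrary choice, and the helper names are hypothetical. One caveat: if the record is proxied through Cloudflare, the name resolves to Cloudflare's addresses rather than the load balancer's, so `resolves_to` would return False even when everything is working.

```python
import socket

# Placeholders: use your own domain and the EXTERNAL-IP from `kubectl get svc -n caddy-system`.
CUSTOM_DOMAIN = "globoticket123.markheath.net"
AZURE_NAME = "globoticket123.eastus.cloudapp.azure.com"
EXTERNAL_IP = "203.0.113.10"  # documentation-range address, not a real load balancer


def a_record(name: str, ip: str, ttl: int = 300) -> str:
    """Zone-file A record pointing the custom domain at the load balancer IP."""
    return f"{name}. {ttl} IN A {ip}"


def cname_record(name: str, target: str, ttl: int = 300) -> str:
    """Zone-file CNAME record aliasing the custom domain to the AKS-provided name."""
    return f"{name}. {ttl} IN CNAME {target}."


def resolves_to(name: str, expected_ip: str) -> bool:
    """Check via the local resolver whether `name` currently resolves to `expected_ip`."""
    try:
        _, _, addresses = socket.gethostbyname_ex(name)
    except OSError:  # covers socket.gaierror / socket.herror for unknown names
        return False
    return expected_ip in addresses


if __name__ == "__main__":
    print(a_record(CUSTOM_DOMAIN, EXTERNAL_IP))
    print(cname_record(CUSTOM_DOMAIN, AZURE_NAME))
```

The CNAME option has the advantage that if the load balancer's IP ever changes, the custom domain follows the AKS-provided name automatically.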

Obviously I need to make sure that my ingress.yaml rule uses the domain name that I plan to use to access the site.

Testing it out

If I visit the domain name that I pointed at the Caddy load balancer with an A record, my browser is happy with the certificate, and Caddy also automatically redirects HTTP requests to HTTPS. (Note that I'm using Cloudflare, which can generate its own certificates, but you still need a valid certificate on the "origin" server, which Caddy is able to provide.)

I can also access the site using the domain name that AKS provides, thanks to my annotation (and the fact that I configured my ingress with a rule for both domain names).

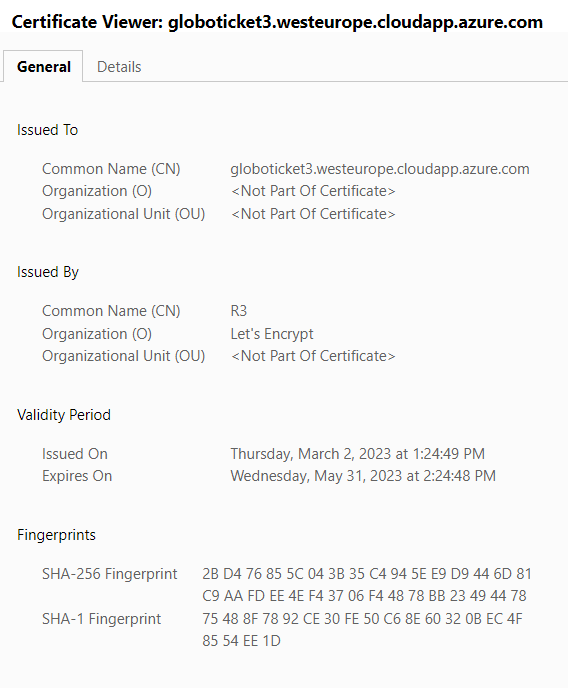
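The HTTP-to-HTTPS redirect can also be confirmed from a script. This minimal Python sketch sends a plain HTTP request and checks for a redirect status whose Location header points at `https://` on the same host. I'm not asserting which 3xx code Caddy uses, so the helper accepts any of the usual redirect codes. Both function names are hypothetical, and it's best pointed at the AKS-provided name, since a Cloudflare-proxied domain may be redirected by Cloudflare before the request ever reaches Caddy.

```python
import http.client
from typing import Optional
from urllib.parse import urlsplit

REDIRECT_CODES = (301, 302, 307, 308)


def is_https_redirect(status: int, location: Optional[str], host: str) -> bool:
    """True if an HTTP response is a redirect to https:// on the same host."""
    if status not in REDIRECT_CODES or not location:
        return False
    parts = urlsplit(location)
    return parts.scheme == "https" and parts.hostname == host.lower()


def check_redirect(host: str, timeout: float = 10.0) -> bool:
    """Make a plain-HTTP GET to `host` and report whether it redirects to HTTPS."""
    conn = http.client.HTTPConnection(host, 80, timeout=timeout)
    try:
        conn.request("GET", "/")
        response = conn.getresponse()
        return is_https_redirect(response.status, response.getheader("Location"), host)
    finally:
        conn.close()


if __name__ == "__main__":
    print(check_redirect("globoticket123.eastus.cloudapp.azure.com"))
```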

If we look at the certificate for that domain, we can see that Caddy has indeed gone off to Let's Encrypt and retrieved a certificate for us.
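The same check can be scripted with Python's standard `ssl` module. In this minimal sketch, `fetch_certificate` connects with normal certificate verification and returns the peer certificate, `issuer_fields` flattens its nested issuer structure, and `days_until_expiry` shows how long the certificate has left. The helper names are my own (hypothetical). As with the browser check, point it at the AKS-provided name rather than a Cloudflare-proxied domain: Cloudflare terminates TLS at its edge, so you'd see Cloudflare's certificate instead of the one Caddy obtained.

```python
import socket
import ssl
import time
from typing import Optional


def fetch_certificate(host: str, port: int = 443, timeout: float = 10.0) -> dict:
    """Connect with certificate verification enabled and return the peer certificate."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()


def issuer_fields(cert: dict) -> dict[str, str]:
    """Flatten the nested 'issuer' tuple returned by getpeercert() into a dict."""
    return {key: value for rdn in cert.get("issuer", ()) for key, value in rdn}


def days_until_expiry(cert: dict, now: Optional[float] = None) -> int:
    """Whole days left before the certificate's notAfter time."""
    expires = ssl.cert_time_to_seconds(cert["notAfter"])
    current = time.time() if now is None else now
    return int((expires - current) // 86400)


if __name__ == "__main__":
    cert = fetch_certificate("globoticket123.eastus.cloudapp.azure.com")
    print(issuer_fields(cert).get("organizationName"), days_until_expiry(cert))
```

Caddy renews certificates automatically, so the days-remaining figure should reset periodically without any action on your part.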

Summary

Overall I was very impressed with how easy it was to set this all up. It took me about five minutes in total, and worked straight away. Of course, this is not the only way to configure HTTPS. A more common approach is to use nginx as your ingress controller and install cert-manager onto your cluster, as described in this tutorial on Microsoft Learn.

If you'd like to check out what I did in more detail, the updated AKS deployment scripts and Kubernetes YAML files can be found on the caddy-ingress branch in my demo project. Next time I re-record the AKS installation demo, I'll likely update the Pluralsight course to show this configuration as well.

A place for me to share what I'm learning: Azure, .NET, Docker, audio, microservices, and more...
