Run Azure Functions in a Docker Container

One of the great things about version 2 of the Azure Functions runtime is that it runs on .NET Core, which means it is cross-platform. That's great for anyone wanting to use the Azure Functions Core Tools on a non-Windows platform, but it also opens up the possibility of running your Azure Function App in a Docker container.

Why Docker?

Now you might be wondering: why would you even want to do this? After all, the "consumption plan" is a superb way to host your Function App, bringing you all the benefits of serverless. You don't need to provision any infrastructure yourself, you pay only while your functions are running, and you get automatic scale-out.

None of this is true if you choose to host your Function App in a Docker container. However, it does open the door to hosting in many more environments than were previously possible. For example, you can host Function Apps on premises or with other cloud providers. Or maybe you're using something like Azure Kubernetes Service (AKS) for all your other services, and would just like the consistency of everything being packaged as a Docker container.

Creating a Dockerfile

The easiest way to create a Dockerfile for an Azure Function app is to install the Azure Functions Core Tools (you will need v2), and run the func init --docker command.

This prompts you for a worker runtime. The choices are currently dotnet, node or java: choose dotnet if you're writing your functions in C# or F#, and node if you're writing them in JavaScript.

This creates a new, empty Function App, including a Dockerfile. The only real difference between the worker runtimes is the base image the Dockerfile uses: for dotnet it's microsoft/azure-functions-dotnet-core2.0, and for node it's microsoft/azure-functions-node8.
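If you'd rather skip the interactive prompt, for example in a build script, the worker runtime can be passed on the command line instead. Here is a bash sketch. It assumes Core Tools v2 is installed and on the PATH as `func`, and that its `--worker-runtime` option accepts the same values as the prompt. The `scaffold_function_app` helper and the `MyFuncApp` folder name are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create a new Function App folder with a Dockerfile,
# without the interactive worker runtime prompt.
# Assumes Azure Functions Core Tools v2 is installed and on the PATH as `func`.
scaffold_function_app() {
  local name="$1" runtime="$2"

  # Only accept the runtimes the prompt offers (dotnet, node, java).
  case "$runtime" in
    dotnet|node|java) ;;
    *)
      echo "unsupported worker runtime: $runtime" >&2
      return 1
      ;;
  esac

  # --docker also generates a Dockerfile using the matching base image.
  func init "$name" --worker-runtime "$runtime" --docker
}

# Usage (commented out so sourcing this file has no side effects):
# scaffold_function_app MyFuncApp node
```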

Here's the Dockerfile it creates for a node Function App. It simply sets the AzureWebJobsScriptRoot environment variable, which tells the Functions host where to find your functions, and then copies everything into the /home/site/wwwroot folder.

```dockerfile
FROM microsoft/azure-functions-node8:2.0
ENV AzureWebJobsScriptRoot=/home/site/wwwroot
COPY . /home/site/wwwroot
```

I took a slightly different approach for my C# Function App. My Dockerfile lets me run docker build from the root directory of my project, and it copies in the contents of the release build of my Function App. It's just a matter of preference how you set this up:

```dockerfile
FROM microsoft/azure-functions-dotnet-core2.0:2.0
ENV AzureWebJobsScriptRoot=/home/site/wwwroot
COPY ./bin/Release/netstandard2.0 /home/site/wwwroot
```

Build and run locally

Building the image is very simple. Just issue a docker build command, giving the image a name and tag:

```
docker build -t myfuncapp:v1 .
```
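The C# Dockerfile shown earlier copies the contents of `./bin/Release/netstandard2.0`, so that release build has to exist before `docker build` runs. Here is a minimal bash sketch. It assumes the .NET Core SDK is installed and that `dotnet build -c Release` writes to that folder. The folder name depends on the project's target framework. `build_image` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the Function App in Release mode, then package
# the output into a Docker image. Run it from the project root, next to the
# Dockerfile that copies ./bin/Release/netstandard2.0.
build_image() {
  local tag="$1"

  # Stop if the compile fails, so we never package stale or missing output.
  dotnet build -c Release || return 1

  # The trailing "." is the build context: the project root.
  docker build -t "$tag" .
}

# Usage:
# build_image myfuncapp:v1
```

Checking the `dotnet build` exit code matters here. Without it, `docker build` would happily package whatever old output is still in `bin/Release`.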

Running your Docker container locally is just as straightforward. You might want to expose a port if you have HTTP-triggered functions, and set any environment variables holding the connection strings your functions need:

```
docker run -e "MyConnectionString=$connStr" -p 8080:80 myfuncapp:v1
```
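The Functions host inside the container takes a few seconds to start. A script that starts the container and immediately calls an HTTP function can therefore fail spuriously. Below is a small bash sketch that retries with `curl` until a URL answers. The `wait_for_http` name, the attempt count and the delay are all assumptions. You would point it at one of your own HTTP-triggered functions, which by default live under the `/api/` route prefix.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll a URL until it responds with a status below 400.
# Arguments: URL, maximum attempts (default 30), seconds between attempts (default 2).
wait_for_http() {
  local url="$1" attempts="${2:-30}" delay="${3:-2}" i

  for ((i = 1; i <= attempts; i++)); do
    # -f makes curl exit non-zero on HTTP 4xx/5xx; -s hides progress output.
    if curl -fs --max-time 5 -o /dev/null "$url"; then
      echo "ready after $i attempt(s): $url"
      return 0
    fi
    # Don't sleep after the final failed attempt.
    if (( i < attempts )); then sleep "$delay"; fi
  done

  echo "gave up after $attempts attempt(s): $url" >&2
  return 1
}

# Usage, after the docker run above (the function name is a placeholder):
# wait_for_http "http://localhost:8080/api/MyHttpFunction" 30 2
```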

Try it out in Azure Container Instances

Once you've created your Docker image, you can easily get it up and running in Azure with Azure Container Instances (ACI) and the Azure CLI. Check out my Pluralsight courses on ACI and the Azure CLI for tutorials on getting started with these.

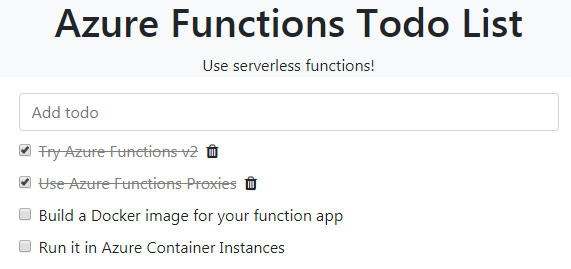

I've created a very simple Azure Functions demo app, along with a container image for it, which is available on Docker Hub as markheath/serverlessfuncs:v2. It implements a simple TODO API. It also uses Azure Functions proxies to proxy through to a static web page, hosted externally in blob storage, that can be used to test the API. It stores its data in Table storage, so it does need a storage account.
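The proxy serves the static page from a blob container that allows anonymous reads. If you want to host your own copy, here is a bash sketch using the Azure CLI. The container name `website`, the local `./website` folder and the `publish_static_site` helper are assumptions, not part of the demo repository. Newer storage accounts may also have anonymous blob access disabled by default. If so, creating a publicly readable container will fail until you allow that on the account.

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy static site files into a publicly readable blob
# container, then print the base URL to use as the proxy destination (WEB_HOST).
# Arguments: storage account name, its connection string, local folder.
publish_static_site() {
  local account="$1" conn_str="$2" source_dir="${3:-./website}"
  local container="website"   # assumed container name

  # --public-access blob allows anonymous reads of individual blobs.
  az storage container create --name "$container" \
    --public-access blob --connection-string "$conn_str" >/dev/null || return 1

  # Upload every file under source_dir, keeping relative paths as blob names.
  az storage blob upload-batch --destination "$container" \
    --source "$source_dir" --connection-string "$conn_str" >/dev/null || return 1

  echo "https://${account}.blob.core.windows.net/${container}"
}

# Usage:
# WEB_HOST="$(publish_static_site mystorageaccount "$connStr" ./website)"
```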

Here's some PowerShell that uses the Azure CLI to create a resource group and a storage account (to store the todo items), and then creates a container to run the Function App. The container needs two environment variables. AzureWebJobsStorage gives it the connection string of the storage account. WEB_HOST tells the proxy where to find the static web content. I've currently put that content in a public blob container at https://serverlessfuncsbed6.blob.core.windows.net/website, but it won't necessarily be available forever. If you want to try this out yourself, you may need to copy the website's static HTML, CSS and JavaScript from my GitHub repository to a public blob container of your own, and point the proxy destination there.

```powershell
# Create a resource group to store everything in
$resGroup = "serverless-funcs-docker2"
$location = "westeurope"
az group create -n $resGroup -l $location

# create a storage account
$storageAccount = "serverlessfuncsdocker2"
az storage account create -n $storageAccount -g $resGroup -l $location --sku Standard_LRS

# get the connection string
$connStr = az storage account show-connection-string -n $storageAccount -g $resGroup --query connectionString -o tsv

# create a container
# point it at the storage account (AzureWebJobsStorage) and at static web content (WEB_HOST)
$containerName = "serverless-funcs-2"
$proxyDestination = "https://serverlessfuncsbed6.blob.core.windows.net/website"

# each backtick must be the last character on its line, with no blank lines in between
az container create `
    -n $containerName `
    -g $resGroup `
    --image markheath/serverlessfuncs:v2 `
    --ip-address public `
    --ports 80 `
    --dns-name-label serverlessfuncsv2 `
    -e "AzureWebJobsStorage=$connStr" "WEB_HOST=$proxyDestination"

# check if the container has finished provisioning yet
az container show -n $containerName -g $resGroup --query provisioningState

# get the domain name (e.g. serverlessfuncsv2.westeurope.azurecontainer.io)
az container show -n $containerName -g $resGroup --query ipAddress.fqdn -o tsv

# check the container logs
az container logs -n $containerName -g $resGroup

# to clean up everything (ACI containers are paid per second, so you don't want to leave one running long-term)
az group delete -n $resGroup -y
```
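You don't have to re-run the `az container show` command by hand until provisioning finishes. You can poll it instead. Here is a bash sketch of that loop, assuming the Azure CLI is installed and logged in. `wait_for_provisioning` is a hypothetical helper. It treats `Succeeded` as done, `Failed` as an error, and keeps waiting on any other state.

```bash
#!/usr/bin/env bash
# Hypothetical helper: wait for an Azure Container Instances container group
# to finish provisioning, then print its public FQDN.
# Arguments: container name, resource group, max attempts (default 60),
# seconds between polls (default 5).
wait_for_provisioning() {
  local name="$1" group="$2" attempts="${3:-60}" delay="${4:-5}" state i

  for ((i = 1; i <= attempts; i++)); do
    # -o tsv returns the bare value (e.g. Succeeded) without JSON quotes.
    state="$(az container show -n "$name" -g "$group" \
      --query provisioningState -o tsv)" || return 1

    case "$state" in
      Succeeded)
        az container show -n "$name" -g "$group" --query ipAddress.fqdn -o tsv
        return $?
        ;;
      Failed)
        echo "provisioning failed for $name" >&2
        return 1
        ;;
    esac

    # Don't sleep after the final attempt.
    if (( i < attempts )); then sleep "$delay"; fi
  done

  echo "timed out waiting for $name (last state: ${state:-unknown})" >&2
  return 1
}

# Usage, with the names from the PowerShell script above:
# wait_for_provisioning serverless-funcs-2 serverless-funcs-docker2
```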

If all goes well, here's what you should see. The Function App also has a scheduled function that deletes completed tasks every five minutes:

A place for me to share what I'm learning: Azure, .NET, Docker, audio, microservices, and more...


Comments

I'm finding it difficult to draw parity between Azure Functions Runtime within the container and the Function Apps proper that runs on the Azure App Service. In particular, the utility of the simple function key authorization via "AuthorizationLevel.Function" on the HTTP bindings seems non-operable once inside a containerized runtime; we're stuck running anonymous and delegating security to a proxy handler and internalizing the function apps on a private vnet. One of my primary goals was to compose a multi-service architecture locally for development. It seems like Function App minus the consumption model and easy binding quickly becomes more arduous than rolling the whole thing in ASP.NET Core Web API. Pleaded a case for overriding function keys for containers here: https://feedback.azure.com/...

Matthew Morgan

Yes, seems like the security story for functions in containers could do with some improvements.

Mark Heath

Is there any way to add it from Visual Studio 2017? For a function app project, I don't see the Project >> Add >> Add Docker option that .NET Core projects have.

Atanu

Not sure, I'm afraid, but it's very easy to add with the CLI. There's a new `func init --docker-only` option that just creates the Dockerfile. You might find that VS2019 has some UI support for this.

Mark Heath

If the whole point is running it on premises, which makes sense, what do I use to replace a Storage Account in Azure? I haven't been able to find this anywhere, and without it the setup can't run on premises (at least not without involving a public cloud). Please assist.

Michael Ringholm Sundgaard

I wouldn't say the whole point is running it on premises. But you're right: if you don't want to connect out, then you can't use an Azure Storage account. You don't need one, though. The functions runtime will work just fine without one. However, most Azure Functions triggers and bindings connect to Azure resources, so you'd need to find alternative bindings if everything must be on premises.

Mark Heath

Trying to publish from within VS2019 was failing (shared disks), but it created the following Dockerfile: https://pastebin.com/4TufC4Rz

confuseled

Can you see why that pushes to Azure but without any functions listed, and with wwwroot empty? The following are run from the directory with the csproj/sln/cs files:

docker build -t $arc_id/testfunc:v1

docker push $acr_id/testfunc:v1

In theory you can also run an instance of Azurite in a container as a replacement for the Azure resources, at least for blob storage, table storage and queues.

Michael Overhorst

But whether this is acceptable will depend on the use case. In the end it's an emulator, meant for local development, so that's something to keep in mind.